How serverless security shifts to the left

Source: article reproduced in full from medium.com

Original author: Ike Gabriel Yuson

Imagine not managing and provisioning servers. Imagine only focusing on deploying code and not worrying about infrastructure.

The benefits of serverless might arguably abstract away a whole operations team. With this allure, however, it’s easy to think that serverless is secure right out of the box. But like any other type of architecture, security measures still need to be implemented. As newcomers dive deeper into serverless and how to secure it, and discover how much operational overhead it abstracts away, one question lingers in every beginner’s mind:

What is there to secure?

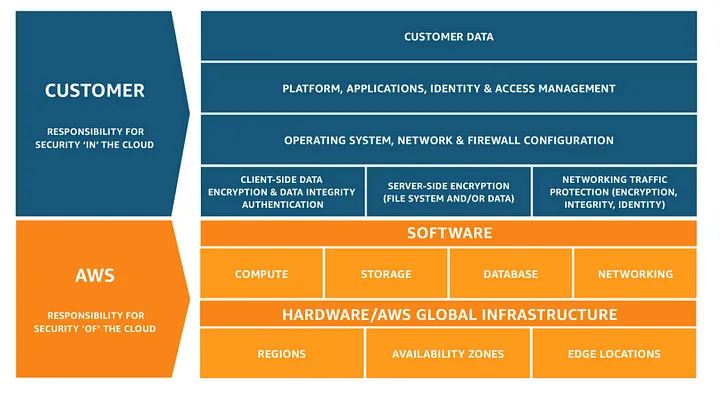

The Shared Responsibility Model

If you have been studying for AWS certifications, then this model might be familiar to you. The AWS Shared Responsibility Model is a framework that defines the responsibilities of AWS and its customers in managing and securing cloud environments. AWS is responsible for the security OF the cloud, whereas the customer is responsible for security IN the cloud.

Take for example spinning up an EC2 instance. AWS is responsible for its physical security by managing and maintaining the security of the data centers in which your EC2 instances are physically hosted. AWS also manages the hardware behind these services, which includes maintaining and updating the corresponding infrastructure. Furthermore, AWS is responsible for complying with various industry and regulatory standards for its data centers and services.

On the other hand, you, the customer, are responsible for maintaining the operating system of your EC2 instances. This includes OS security patches, anti-virus software, and other security-related configurations. You are responsible for data security as well: implementing encryption at rest and encryption in transit is your responsibility, not AWS’s. You are also responsible for setting up firewalls like security groups and NACLs (network access control lists), and for using IAM to control who can access your AWS resources and how they can interact with them.

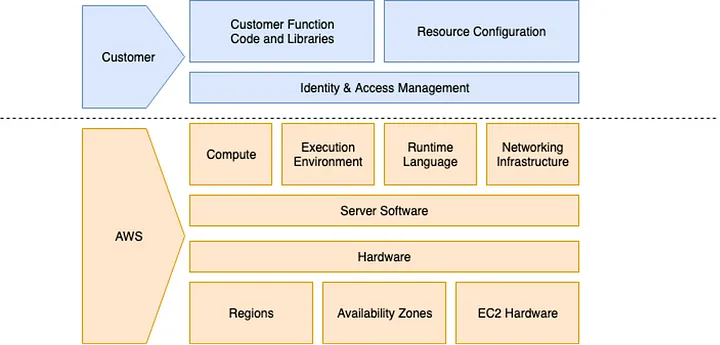

With serverless, however, this traditional Shared Responsibility Model changes. To further understand, see the illustration below:

Although this is actually AWS Lambda’s Shared Responsibility Model, it captures well which responsibilities serverless takes off the customer’s hands. In serverless, AWS is now responsible for maintaining the underlying operating systems and networking infrastructure of its serverless services, such as AWS Lambda and Amazon S3.

Additionally, AWS handles the scaling as well as the maintenance of the physical servers that these serverless services run on under the hood. The few things the customer remains responsible for are the following: code security, application configuration, IAM roles and permissions, data security, and monitoring and logging.

In a nutshell, the following are the key differences between the traditional Shared Responsibility Model and the serverless Shared Responsibility Model:

- Less Overhead for Customers: Customers don’t manage the operating system, network configurations, or the physical servers. This reduces the administrative burden and allows them to focus more on application development.

- Increased AWS Responsibilities: AWS has more responsibilities in managing the security and maintenance of the infrastructure, which can lead to better standardization and potentially higher security benchmarks, since AWS experts manage these aspects.

- Security Focus Shifts to Code and Data: In serverless, the primary focus for customers shifts towards securing the application code and managing data security, given that AWS handles much of the infrastructure security.

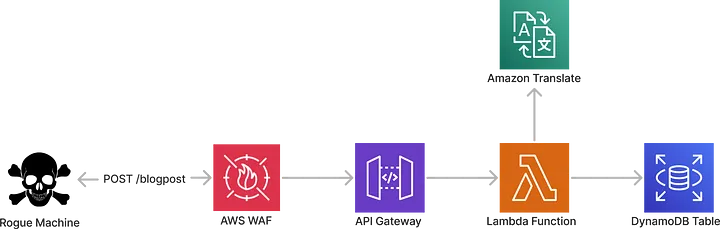

The Security Shift to the Left

The “shift to the left” basically means that with serverless, security shifts towards the developers, the builders of the application. Now, before people in operations rejoice, this does not necessarily take the whole security responsibility off the operations team. Enforcing rate limiting with AWS WAF and SQS, as well as defending against Denial of Service attacks, is still the operations team’s responsibility, even in serverless.

As stated above, serverless security shifts to securing application code, and this is the responsibility of the developers. Take for example this code block below:

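```python
def lambda_handler(event, context):
    # Vulnerable on purpose: the id from the request body is pasted straight into the SQL text
    id = event['body']['id']
    user = f"SELECT * FROM users WHERE id = {id}"
    return user
```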

Experienced developers will immediately see what’s wrong with this block of code: it is vulnerable to SQL injection. You may laugh and say that this kind of vulnerability is somewhat outdated and that technological advancements already counter it at the infrastructure level. With serverless, however, these once-common attacks become relevant again, because the responsibility for managing security shifts significantly towards the application code itself.

What if a bad actor is able to send a request body like the example below to your serverless API?

```json
{
  "id": "1; DROP TABLE users;"
}
```

Scary, right? In a serverless environment, developers may automatically assume that the infrastructure-level security handled by the cloud provider is sufficient to protect against such vulnerabilities. This oversight can lead to neglecting security best practices at the application level. Thus, vulnerabilities like SQL injection, which might seem outdated in other contexts, can resurface with greater impact in serverless applications unless developers are diligent in implementing secure coding practices.
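As a minimal sketch of what such a secure coding practice can look like, the example below validates the incoming id and passes it to the database as a bound parameter instead of formatting it into the SQL string. It uses Python’s built-in sqlite3 module purely as a stand-in for whatever database the function really talks to; the `get_user` helper and the `users` table layout are assumptions made for illustration.

```python
import sqlite3


def get_user(conn: sqlite3.Connection, raw_id):
    """Look up one user by id without building SQL from user input."""
    # Validate first: the id is expected to be an integer, so reject anything else.
    try:
        user_id = int(raw_id)
    except (TypeError, ValueError):
        raise ValueError("id must be an integer")
    # The "?" placeholder sends the value separately from the SQL text,
    # so it is never parsed as part of the statement.
    cursor = conn.execute("SELECT id, name FROM users WHERE id = ?", (user_id,))
    return cursor.fetchone()


# Hypothetical in-memory table, for demonstration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (1, "alice"))
```

With this version, the malicious body shown earlier is rejected with a `ValueError` before any query runs, while a legitimate id such as `"1"` still returns the matching row.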

What about using ORMs?

Using ORMs is one way of countering vulnerabilities like SQL injection. However, it is essential to consider that these third-party ORM packages can also have their share of vulnerabilities. Therefore, not only is it the developer’s job to write secure code, but it is also their responsibility to thoroughly check the third-party packages they are using.

Developers should ensure they are using reputable sources and maintaining up-to-date versions of these libraries. Regularly performing security audits and subscribing to vulnerability alerts related to these packages can help mitigate potential risks. By proactively managing these dependencies, developers can significantly reduce the attack surface of their applications.
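One small part of this dependency hygiene can be automated. The sketch below is an illustration, not a replacement for a real vulnerability scanner: it assumes dependencies are listed in a requirements.txt-style format and flags every entry that is not pinned to an exact version, on the assumption that an audit is only meaningful when you know exactly which version gets deployed. The `find_unpinned` helper and the example package list are hypothetical.

```python
def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement lines that are not pinned to one exact version."""
    unpinned = []
    for line in requirements_text.splitlines():
        # Drop inline comments and surrounding whitespace.
        entry = line.split("#", 1)[0].strip()
        if not entry:
            continue
        # Only "name==version" without wildcards counts as pinned in this sketch.
        if "==" not in entry or "*" in entry:
            unpinned.append(entry)
    return unpinned


# Hypothetical dependency list; package names and versions are illustrative.
example = """
requests==2.31.0
sqlalchemy>=2.0   # a range, so the deployed version can drift
boto3
"""
print(find_unpinned(example))
```

A check like this could run in the deployment pipeline so that unpinned packages are caught before they reach production.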

IAM Privilege Escalation

When developing serverless applications, using Infrastructure as Code (IaC) is required. This is not optional. Whether you are using the Serverless Framework, AWS CDK, or even Terraform as your IaC tool of choice, it is crucial to understand how these tools contribute to both the efficiency and security of your serverless deployments. With serverless, Infrastructure IS Code.

Using IaC tools like the Serverless Framework or AWS CDK enables you to integrate security best practices directly into the deployment process, especially when setting up the IAM execution roles of your Lambda functions. If you have any experience working on a completely serverless project in AWS, you might have noticed that it’s the developer’s responsibility to define all of the execution roles of each Lambda function.

Take for example the execution role below:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "*",
      "Resource": "*"
    }
  ]
}
```

Some people think that whoever uses this IAM policy as the execution role of one of their Lambda functions is out of their mind. This execution role basically gives a Lambda function admin access, which leaves it susceptible to an IAM privilege escalation attack.

What if a bad actor is able to access your Lambda function and, at the same time, sniff out its corresponding execution role? The bad actor now has the power to create an IAM user through this Lambda function and use its corresponding credentials to infiltrate your AWS account. Scary, isn’t it? This is without a doubt possible if developers are not diligent enough to practice least privilege when formulating execution roles.

From another angle, this example illustrates another aspect of “shifting security to the left”: integrating security measures early in the development process rather than addressing them as an afterthought at the end.
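To make least privilege more concrete, here is a rough sketch of a check that could run before deployment. It scans a policy document for Allow statements that grant every action or cover every resource. The `find_wildcard_statements` helper is hypothetical, and the scoped policy uses a placeholder account id, region, and table name; only the policy structure and the `dynamodb:GetItem` action come from IAM itself.

```python
def find_wildcard_statements(policy: dict) -> list[dict]:
    """Return Allow statements that grant every action or cover every resource."""
    statements = policy.get("Statement", [])
    # "Statement" may be a single object or a list of objects.
    if isinstance(statements, dict):
        statements = [statements]

    def as_list(value):
        # "Action" and "Resource" may each be a string or a list of strings.
        return [value] if isinstance(value, str) else list(value or [])

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        if "*" in as_list(stmt.get("Action")) or "*" in as_list(stmt.get("Resource")):
            flagged.append(stmt)
    return flagged


# The admin-style policy from the example above.
admin_policy = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
}

# Hypothetical least-privilege alternative: one read action on one table.
# The account id, region, and table name are placeholders.
scoped_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem"],
            "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/users",
        }
    ],
}
```

Calling `find_wildcard_statements(admin_policy)` flags the single admin statement, while the scoped policy passes cleanly. Note that this sketch only catches a literal `"*"`; partial wildcards such as `iam:*` would need an extra rule.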

What is there to secure?

Now, going back to the question at the start of this article, “What is there to secure?” it becomes clear that in serverless architectures, every layer from the code to configurations carries potential vulnerabilities that need vigilant protection. As we have explored, the allure of serverless technology does not magically eliminate the need for security. Rather, it reshapes where and how security practices are implemented.

Serverless, while offering scalability and efficiency, also demands a heightened focus on security at the application level. Developers are not just coding; they are essentially building the fortress walls for their operations in real time. This requires a disciplined approach to secure coding practices, diligent management of third-party libraries, and meticulous crafting of access and execution roles.

Moreover, embracing serverless is part of a broader paradigm shift, where operational responsibilities and traditional roles are redefined. Security is no longer a responsibility of a specific team but a recurring aspect of every stage of application development and deployment. Organizations must foster a culture of security that transcends traditional roles. Operations teams must collaborate closely with developers to implement effective monitoring and incident response. This integrated approach ensures that security is not just a feature or an afterthought but a fundamental aspect of the serverless deployment process.

Embracing the “shift to the left” in security practices ensures that risks are managed proactively, not reactively. This proactive security mindset will be key to leveraging serverless technology to its full extent while minimizing risk and maximizing trust in the systems we depend on.